This is a painting generated by Midjourney, which is a generative AI program that creates art based on natural language input prompts. The painting is called Théâtre D’opéra Spatial, and it gained international attention after it won first prize in the 2022 Colorado State Fair’s annual fine art competition.

The existence of this digital painting is relevant to this summary because it touches on several of the legal issues that surround generative AI as a technology.

Before we get into those issues I want to frame the discussion a bit because I think it’s helpful to know where we are on the historical timeline as far as AI is concerned.

What is the difference between generative AI and the AI technologies that came before it?

Generative AI differs from previous AI technology in a few important ways, but one of those ways is probably the most important. Earlier AI models required structured data to learn – generative AI models do not.

Structured data is labeled data – so, for example, showing an AI model pictures that are labeled as animals to train it to recognize pictures of animals. Unstructured data is just unlabeled and uncategorized data, so for example, a copy of all of Wikipedia.

Alright, with those definitions out of the way, let’s answer the question: “Why now? Why is there a generative AI boom now?”

There are 3 main reasons.

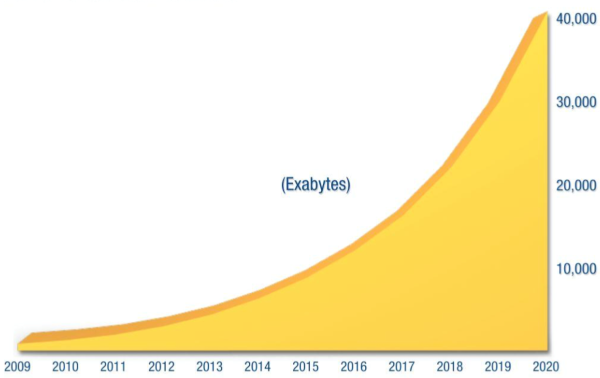

First, in 2013, variational autoencoders, or VAEs, were introduced; these are models that do not require structured data to learn and can be thought of as the forebearers of today’s generative AI models. They have been evolving since their invention, and that evolution was hampered by a couple of things, a lack of data and a lack of computing power.

Second, at some point it was discovered that graphical processing units, or GPUs, are much more efficient at performing the computations demanded by generative AI models than CPUs. On top of that, just like CPUs, GPUs have been advancing in computational power at breakneck speeds. You may have read about NVIDIA and seen their stock price recently – they are in the GPU business so their fortunes rise and fall to a great extent with generative AI. To be clear, GPUs are not categorically “better” than CPUs, but they have different hardware architecture and are designed and manufactured to be good at different types of computing.

The final reason is the increase in the amount and availability of data in the world. Generative AI models, even more so than Discriminative AI models, need huge volumes of data to train on in order to be effective. The current state of the art is the more data the better. You may have heard of “big data.” There is now a subdiscipline in data science called “small data” – which is the study of how to efficiently train generative AI models on smaller quantities of data without sacrificing the effectiveness of the end product.

Alright, I am going to wrap up the background here but before I do I just want to say a few words about why we care that AI models can now learn from unstructured data. We care because the real world is a mess from a data perspective. Hypothetically, if you can fire up an AI model and then feed it, for example, all of the data within a given company’s IT environment, and I mean literally all of it, and then have that model work and be able to provide output, that’s a huge step forward, and that’s the direction a lot of experts think enterprise applications of AI are moving in. What we might see are what are called foundational models, that are trained on huge amounts of data, dropped into business environments and fine-tuned on that environment’s specific data, that are then capable of producing all sorts of interesting insights as output. And this brings us to our first legal issue, which is confidentiality.

Why can generative AI raise confidentiality concerns?

There are two different ways in which generative AI can give rise to confidentiality concerns.

Access to Training Data Provided to Licensor of Model

Foundational models, sometimes called base models, are machine learning models that are trained on absolutely enormous data sets and require an enormous amount of computational power during that training (hence Nvidia’s stock price). The result is a model that is a jack-of-all-trades but arguably a master of none, or at least not practical to use for downstream applications in its raw form. OpenAI’s GPT-n series and Google’s BERT are examples of foundational models. These models require additional training, sometimes called “fine-tuning,” in order to realize their full potential in their target application. That means the foundational model needs a ton of additional information to ingest before it’s ready to be deployed.

The problem that arises is that the information that’s needed for training might be confidential and proprietary and the company that created and possesses the model that needs to be trained is usually different than the company that owns the training information. This should sound like a familiar concern to a lot of you because this was a big issue when cloud computing and SaaS first took off. Even in the context of NDAs or confidentiality provisions some companies get nervous about giving other companies their confidential information.

For many, many downstream applications of this technology that additional training data needs to come from someone other than the company that created and is licensing the foundational model itself.

In addition, sometimes that additional training data is confidential and proprietary. So you have a problem, either the confidential information goes to the model or the model goes to the confidential information. In the latter case, that would mean companies like OpenAI providing copies of GPT-4 to its customers so they can host it locally, fine-tune it, and then build apps on top of it. As it turns out, licensors of foundational models are not doing this. Instead, they are only offering access to their foundational models via API or a web GUI. This means the confidential information must be transmitted to the foundational model licensor which means the licensor has access to the confidential information. Even in the context of an NDA or confidentiality provisions, this makes some companies nervous.

Now, there are a couple of solutions to this nervousness. The first and most obvious is to use data minimization, essentially just omit or minimize confidential information from the training data set. Sometimes this isn’t possible or practical. In that case, a second potential solution is to get possession of a foundational model and use it in-house. There are open-source foundational models out there. Are they as good as GPT-4? No, but like the proprietary foundational models, they are advancing rapidly.

Output from Model Disclosing Confidential Information

The second way generative AI can raise confidentiality concerns is simpler to explain. As mentioned earlier, sometimes confidential information needs to be included as part of the training data set for a given model so that it can achieve its downstream application. Because the training data is unstructured, the model does not know what is confidential and what is not. Accordingly, the model might produce output that contains or is derived from confidential information. If that output is provided to a third party (meaning not the company that fine-tuned the model), that could amount to a leak of proprietary information and/or a breach of confidentiality.

It’s also important to note here that training data doesn’t have to be a big monolithic block of data that is transferred once to a model to train it. Some models are designed to continuously train on end user input prompts, and that raises the same or very similar concerns.

Again, there are solutions to these problems that are operational and technical in nature, rather than legal. First, again, the training data set can omit or minimize confidential information. Second, the training data set could receive pre-processing, such as using software tools to achieve the pseudonymization of personally identifiable information (PII) or the redaction of financial information.

What are the concerns with AI model output and what are hallucinations?

There are two primary concerns associated with output from AI models, one of them legal in nature and the other practical in nature.

Practical Concerns: Hallucinations and Veracity

The first practical concern with generative AI model output is that it’s simply sometimes wrong in a finite way – meaning you could ask it if the sky is blue and its response might be that the sky is orange.

The second practical concern with generative AI model output is closely related to this first and that is that the models will sometimes be wrong in a non-finite way, meaning they will craft entire narratives from whole cloth – literally generating very detailed and complex collections of non-facts that are presented in narrative form.

The problem of hallucinations is likely multicausal. Some hallucinations could be caused by training data sets that contain two different answers to the same question, meaning faulty training data caused the hallucination. Alternatively or in addition, the actual technical methods with which the model was trained might be deficient in some way.

Hallucinations are a very thorny problem in generative AI and a huge focus of ongoing research.

The solution to issues with veracity and hallucinations is pretty simple: a human should always carefully review and edit the output from a text-based AI model before using that output in most applications. Human review is an element that is common to every set of AI best practices ever published, at least that I’ve seen. And it’s worth noting that human review is not a new thing – before generative AI a good researcher would read several articles online about the same subject prior to drafting one of their own. That practice helps avoid factual inaccuracies and should be preserved when conducting research using generative AI technologies.

Legal Concerns: Copyright, Plagiarism

First, some definitions because it’s been a long time since sitting in an English class. Plagiarism is an ethical construct, copyright is a legal construct. Plagiarism is stealing ideas or a small amount of content from another and presenting them as your own. In the case of text, for example, this would be the absence of quotation marks on maybe a single sentence or a few sentences coupled with the absence of a citation or attribution. Plagiarism is dishonest but not “illegal.” It describes a situation where a small amount of content is stolen and the manner in which it was used is unlikely to harm the original author’s financial interest in their work. While not illegal, plagiarism is unethical and there are real-world consequences, depending on the context. For example, a college student might be expelled, a reporter might be fired, or a blogger might have their reputation irreparably sullied.

It is possible to plagiarize without infringing copyright, to infringe copyright without plagiarizing, and to do both simultaneously. AI models that output natural language have been found to do all three of these things.

Why would AI models plagiarize or infringe copyright?

There are two main reasons for plagiarism and copyright infringement by AI models.

The training data is bad

As mentioned earlier, in order to train a foundational model for natural language output you need a huge amount of text, and the higher quality the text the better. If any of the text in that training data is copyrighted, you’d need a license to use the text for your intended use – or at least in theory you would, and exactly how that plays out is the subject of pending litigation.

As it turns out, some of the generative AI models that have been trained and deployed in commerce were trained on copyrighted text or art without a license.

For example, one of the copyright lawsuits in the generative AI space alleges that: Bibliotik is a website that hosts pirated content. At one time it was hosting an 886GB dataset known as Pile. The Pile dataset, in turn, contains a dataset known as books3. Books3 was composed of approximately 200,000 books in .txt format, many of which were under copyright. The Pile dataset was a component in the training data used to train a large family of foundational models by Meta Platforms Inc.

Another example is the Théâtre D’opéra Spatial – the Midjourney model that created it was trained on proprietary artwork and its makers are being sued for copyright infringement.

These are both examples of a situation where the input is copyrighted (allegedly).

The training process is bad

There are also pending cases where the output is copyrighted (allegedly). For example, there is a class action pending against Microsoft and OpenAI because their tool, Copilot, which is designed to aid software engineers in writing source code, has been known to output large amounts of (allegedly) copyrighted code, verbatim.

Is it true that hackers and bad actors are exploiting generative AI?

Yes, it is. The FBI has issued warnings directly to the public as well as to journalists relating to three malicious use cases of generative AI:

First, bad actors are using AI image generators to create both still image and video-based “deepfakes” of individuals that are sexually explicit. The bad actors then use this content to extort or attempt to extort victims.

Second, bad actors are using AI voice generators to mimic the voice and speech patterns of people who are adjacent to their target in some way, such as a loved one or boss. These tools are then used to trick the target into taking some action, such as transferring money. The elderly, in particular, have been the subject of these types of attacks.

Third, bad actors are hacking chatbots to disable their content filters. Most chatbots have controls on them that prevent them from generating output that would be used for a nefarious purpose. For example, if you ask ChatGPT to generate a phishing email, it will decline to do so. Hackers have figured out ways to disable these filters in ChatGPT and other chatbots. They then use these chatbots for nefarious purposes, including drafting phishing emails and generating or improving malware. While the creation of malware has been a problem for ages, the concerns voiced by the FBI are that generative AI tools are making the creation or improvement of malware much easier and less technically-demanding, thereby increasing the “supply” of malware.

What is going on with AI laws?

Very briefly I’ll touch on new laws around AI. We’ll start with the U.S. – there are no federal laws but at the state level there are literally about 100 bills pending in U.S. state legislatures relating to AI. Not all of them relate to generative AI – for example one bill I read about would prohibit the use of any AI in analyzing an end user’s behavior to customize an online gambling website to make it more addictive for that particular end user. These bills are all over the map in terms of their subject matter and many of them were written as a response to the generative AI boom.

In Europe we now have the EU AI Act: it passed in June 2023. It is the first legislation of its kind in the world and broadly speaking it bans applications of AI that are risky and puts controls on less risky applications. In a nutshell it classifies AI use cases into 3 different buckets based on their potential to cause harm: banned practices, high-risk systems, and other AI systems. I won’t get into all of the details but the important thing to note is that most software and SaaS companies’ use will probably fall into the third bucket, the “other AI systems” which the Act specifically does NOT regulate.

Last, I’ll just mention that Italy famously banned ChatGPT at one point but has since lifted the ban. The ban came from privacy and end user consent concerns which Italy feels OpenAI has now addressed.

What about bias and discrimination in AI?

Bias in AI is not a new thing but it’s gained renewed attention with the generative AI boom. For example, back in 2016 there was a company called Northpointe which was marketing software to criminal justice systems that could take as input a bunch of data about a given prisoner and then used an AI model to assign a percentage chance that the prisoner in question would commit another crime if they were let out on parole. Even though the input data did not include a categorization on race or ethnicity it was discovered that the AI model was grossly and erroneously overestimating recidivism rates of a certain race and underestimating recidivism of other races. Plenty of other examples of bias have been discovered in other AI models.

There are a few key takeaways from this in the legal realm. First, most of the articles I’ve read on the subject cited experts who were theorizing that the bias the models were exhibiting was due to bias in the training data itself, rather than some sort of inherent bias in the programming of the model itself. Second, it’s important to understand that the models, even though they can’t reason or use logic, are highly adept at spotting patterns – so, in this realm they are capable of observing correlations between things – one example I read was of an AI model that wasn’t being fed a human’s gender as an input but nonetheless “deduced” it based on the fact that the human went to an all-women’s college in the U.S. The third thing to be aware of is that using literally any AI in the human resources context should make your antennae go up for obvious reasons and is something we should be very wary of.

Having said that, AI-powered resume screening tools are absolutely ordinary course business today and have been around for a while. For example, SmartRecruiters has this functionality. The 2 things to watch out for in this context are tools that take a first pass through resumes to remove indicators of age, gender, race etc. before feeding the resume into the model and also that the tool does not train on past hiring decisions – SmartRecruiter’s AI feature meets both of these criteria (not associated with them, just using as an example).

So, to sum up – bias in the AI context, 2 things to remember: (1) it’s probably due to flaws in the training data and (2) AI models are incredibly good at spotting patterns, including patterns humans are incapable of spotting, so very subtle or very slight bias in a dataset absolutely will get picked up by an AI model and this represents a thorny technical problem that has not been overcome. Sam Altman, CEO of OpenAI, has stated publicly that he thinks bias in AI models can be eliminated and is a technical problem.